I recently had to copy a static website for a client who no longer had access to their back end. I used a program called HTTrack Website Copier to download the entire site locally so that I could upload it to a new server. I only need to “download” a site without back-end access about once a year, but for this simple static site the software worked nicely!

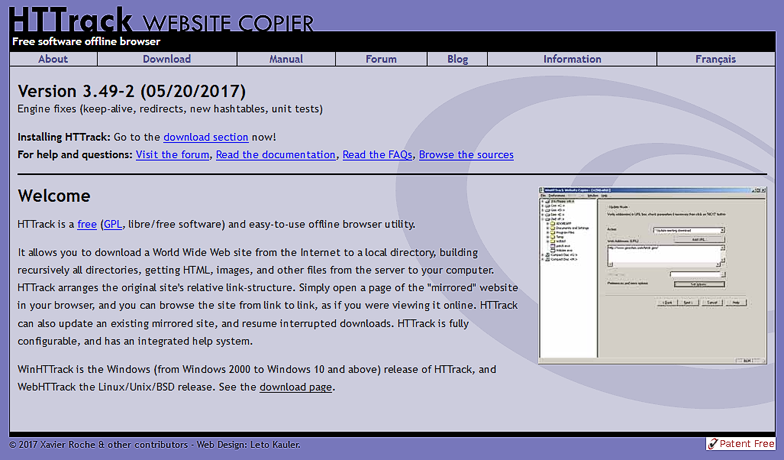

HTTrack’s home page says the following:

HTTrack is a free (GPL, libre/free software) and easy-to-use offline browser utility.

It allows you to download a World Wide Web site from the Internet to a local directory, building recursively all directories, getting HTML, images, and other files from the server to your computer.

Go check out their site here: http://www.httrack.com/

To save others time: httrack is command-line only by default and doesn’t easily support logins. No GUI is available for RPM-based distros, and the Qt front-end appears to be unmaintained (and is also unpackaged).
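
Since httrack is driven from the command line, here is a rough sketch of what a basic mirror run could look like when wrapped in a small Python script. It is not the exact command I ran. The URL `http://www.example.com/`, the output folder, and the helper names `build_httrack_command` and `mirror_site` are all made up for illustration. The `-O` (output path), `+pattern` (filter) and `-v` (verbose) arguments follow the basic example in httrack's own documentation, and the script assumes `httrack` is already installed and on your `PATH`.

```python
import shutil
import subprocess
from urllib.parse import urlparse


def build_httrack_command(url: str, output_dir: str) -> list[str]:
    """Build the argument list for a basic httrack mirror of `url`.

    Hypothetical helper for illustration; it only assembles arguments.
    """
    host = urlparse(url).hostname
    if not host:
        raise ValueError(f"not an absolute URL: {url!r}")
    # Drop a leading "www." so the filter pattern is built from the bare domain.
    domain = host[4:] if host.startswith("www.") else host
    return [
        "httrack",
        url,
        "-O", output_dir,   # where the local copy is written
        f"+*.{domain}/*",   # filter: accept links on hosts ending in .<domain>
        "-v",               # verbose output
    ]


def mirror_site(url: str, output_dir: str) -> None:
    """Run httrack and raise if it is missing or exits with an error."""
    if shutil.which("httrack") is None:
        raise RuntimeError("httrack was not found on PATH")
    subprocess.run(build_httrack_command(url, output_dir), check=True)


if __name__ == "__main__":
    # Placeholder URL and folder; swap in the site you actually need to copy.
    mirror_site("http://www.example.com/", "./example-mirror")
```

Once the run finishes, the output folder should hold the downloaded HTML, images and other files, ready to upload to the new server.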